Nofima scientists are exploring how hyperspectral cameras and infrared light can improve the speed and accuracy of fish welfare assessments in aquaculture

With the rapid growth of global aquaculture over the last few decades, the management of fish welfare is of increasing concern. And for good reason: Farmed fish with higher welfare have better survival rates and fewer diseases and parasites, which increases farm efficiency. Fish farmers who improve welfare conditions on their farms report less aggression, reduced fin damage, improved growth rates and better feed conversion ratios.

Remaining competitive in a changing market is another factor. More consumers are considering animal welfare issues, seafood traceability and environmental, social and governance principles when making purchases, with research showing that some are willing to pay more for fish products with health and environmental labels. By improving fish welfare, farmers boost not only their efficiency but also their ability to sell products at a premium.

But there’s a snag: Measuring the health and happiness traits of a farmed population numbering in the thousands can be tricky, and many current techniques fall short.

“Right now, there’s a lot of energy around trying to improve fish welfare,” said Evan Durland, a scientist with the breeding and genetics group at Norwegian food research institute Nofima. “Our tools to quantify fish welfare are limited, and a lot of them rely on human observations. So essentially, [it involves] pulling fish out of the water, looking at them and giving them a score.”

Observing aquatic animals underwater is difficult, and traditional techniques are time-consuming and rely heavily on the human eye or laboratory-based tools to detect welfare traits. This led Nofima scientists to launch DeepVision, a three-year interdisciplinary project exploring how technologies designed for land-based agriculture and food systems can be adapted to assess numerous aspects of fish health. The project aims to “expand our toolkit for welfare assessments,” generating not only new ways to obtain existing data but also “potentially entirely new traits that cannot be detected effectively with traditional methods.”

“We think that some technological tools available can improve on some of these approaches,” said Durland.

Lighting the way

Training computers to identify welfare traits means more samples can be processed in less time and with greater accuracy, using cameras, sensors and computer algorithms to produce more reliable, quantifiable estimates of fish health. One such tool is hyperspectral imaging, a technology that captures many more wavelengths of light within the visible spectrum and also extends beyond it into the ultraviolet and infrared.

“Hyperspectral cameras were developed by NASA back in the 1970s to put on satellites,” said Durland. “Unlike a normal camera, which takes color images composed of a red, green and a blue channel, hyperspectral cameras see a wider range of light. But more importantly, they have a lot more specificity within that range. A color image is three channels. Our hyperspectral cameras pick up 186, so just a lot more information there. So not only do we capture wavelengths a little outside the visible range of light, but we capture more distinct ‘colors’ within it.”
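To make the difference in data volume concrete, here is a minimal Python sketch. The 186-band count comes from Durland; the 400 to 1000 nm wavelength range and the 100 x 100 pixel image size are hypothetical values chosen only for illustration, not specifications of Nofima’s cameras.

```python
# Compare how many intensity values an RGB image and a hyperspectral image store.
# N_BANDS comes from the article; the wavelength range and image size are hypothetical.

N_BANDS = 186                            # spectral bands per pixel, as quoted for Nofima's cameras
WL_START_NM, WL_END_NM = 400.0, 1000.0   # hypothetical range spanning visible and near-infrared


def band_centers(n_bands, start_nm, end_nm):
    """Evenly spaced band-centre wavelengths (nm) from start_nm to end_nm inclusive."""
    step = (end_nm - start_nm) / (n_bands - 1)
    return [start_nm + i * step for i in range(n_bands)]


def values_per_image(height, width, channels):
    """Number of intensity values stored for an image of the given shape."""
    return height * width * channels


wavelengths = band_centers(N_BANDS, WL_START_NM, WL_END_NM)
rgb_values = values_per_image(100, 100, 3)        # red, green and blue channels
hsi_values = values_per_image(100, 100, N_BANDS)  # one value per band per pixel

print(f"band spacing: {wavelengths[1] - wavelengths[0]:.2f} nm")
print(f"RGB: {rgb_values} values, hyperspectral: {hsi_values} values")
print(f"ratio: {hsi_values // rgb_values}x")
```

Each hyperspectral pixel is effectively a 186-point spectrum rather than three numbers, which is what lets models distinguish substances whose colors look alike to the eye.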

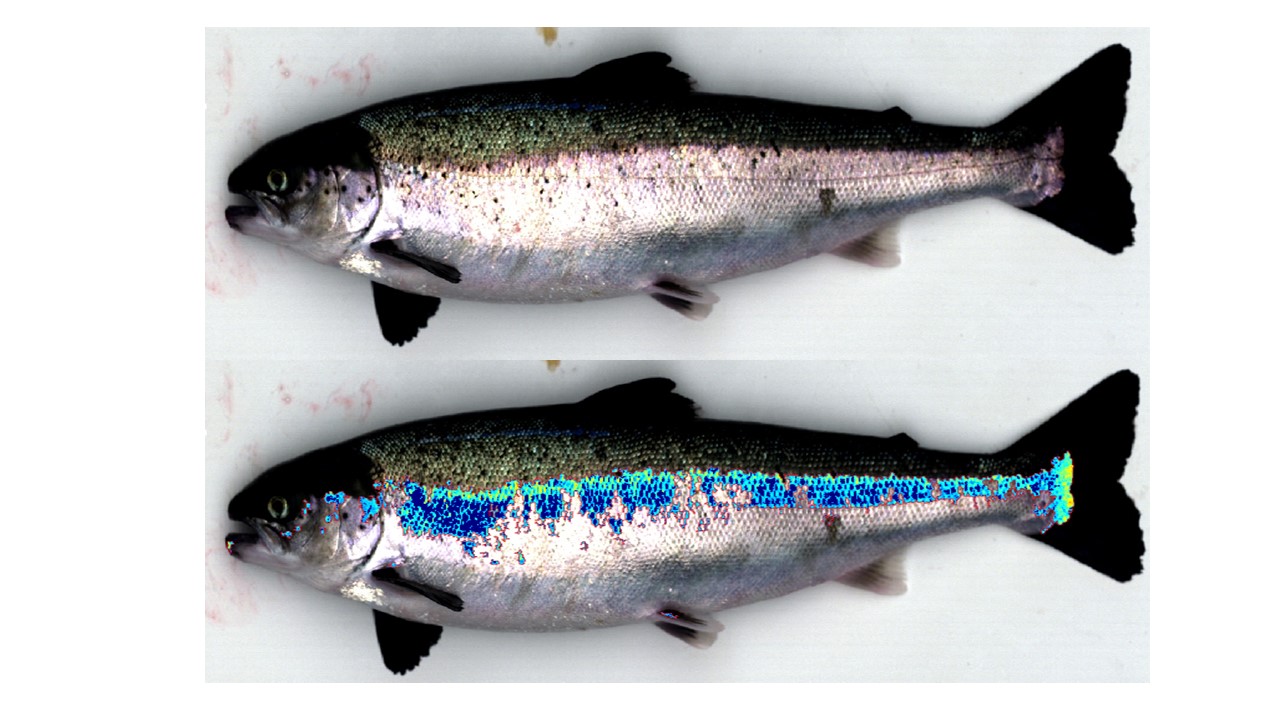

Nofima scientists have previously used these cameras to estimate the amount of blood in fish fillets as a grading metric. Now, the research team is testing the technology as a potential method of detecting wounds on salmon.

“We had already had these models for detecting blood in fish meat, and we adapted [it] to look for blood on whole live fish,” said Durland. “We can detect hemorrhaging on those fish with a much greater sensitivity than the human eye.”

Although the fish need to be pulled from the water and anesthetized to keep them still, the imaging itself is non-invasive, so the analysis requires no further handling of the fish. It’s also fast: once the computer algorithms are trained, data for an entire sample can be obtained in a matter of seconds.
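The article does not describe how Nofima’s blood-detection models work. As a minimal sketch of one common way to flag pixels in a hyperspectral image, the example below uses the spectral angle mapper, a standard classification technique that compares each pixel’s spectrum with a reference spectrum. The reference “blood” spectrum, the four-band toy image and the 0.10-radian threshold are all hypothetical.

```python
import math

# Spectral angle mapper (SAM): flag pixels whose spectrum has nearly the same shape
# as a reference spectrum. Reference, image and threshold are hypothetical.


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors; 0 means identical shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_theta = dot / (norm_a * norm_b)
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


def flag_pixels(image, reference, max_angle):
    """Same-shaped mask: True where a pixel's spectrum lies within max_angle of reference."""
    return [[spectral_angle(px, reference) <= max_angle for px in row] for row in image]


BLOOD_REF = [0.10, 0.15, 0.60, 0.70]  # hypothetical 4-band "blood" spectrum
image = [  # 2 x 2 toy image, 4 bands per pixel
    [[0.11, 0.16, 0.58, 0.72], [0.50, 0.52, 0.49, 0.51]],
    [[0.20, 0.30, 1.20, 1.40], [0.70, 0.40, 0.20, 0.10]],
]

mask = flag_pixels(image, BLOOD_REF, max_angle=0.10)
flagged = sum(v for row in mask for v in row)
total = sum(len(row) for row in mask)
print(mask)
print(f"flagged {flagged} of {total} pixels ({flagged / total:.0%})")
```

Because the spectral angle depends on the shape of a spectrum rather than its overall brightness, the bottom-left pixel, which is simply twice as bright as the reference, is still flagged. Once a reference and threshold are fixed, classifying an image is a single pass over its pixels.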

If the technique proves successful, Durland said it could be applied in commercial aquaculture, and he theorized that the technology could also be expanded to serve as “a pre-check” before sea lice treatments are administered.

“If they wanted to scan a hundred fish and get high precision phenotypes [to find out] their current status: are they healthy enough to give them a lice treatment?” said Durland. “We anticipate that that could be a good use for that tool.”

Sex and fat detection

Using these super cameras, Nofima scientists are also trialing a non-invasive technique to determine the sex of the European flat oyster (Ostrea edulis). One unusual aspect of the flat oyster is that it changes sex from year to year, waffling back and forth between male and female. This creates particular challenges for breeders.

“For a breeding program, you really need to be able to tell which are males and females before you try and make some crosses,” said Durland. “Right now, the current method is to basically put 20 oysters in a bucket and hope that you have enough males in there to fertilize females. But if you knew sex ahead of time, it makes spawning much more efficient.”

The Nofima team is testing out whether hyperspectral cameras can help differentiate between male and female gonads.

“There’s a way to cheat and peek through the shell,” said Durland. “If we could create a good enough model and then adapt that to see through the shell, then you’d have a non-invasive way to [identify sex] before a spawning season.”

Infrared cameras also hold promise for fat detection in salmon and cod. Liver fat is one example: existing methods for estimating it are either to examine the liver’s color in the field or to conduct lipid extractions in the lab. The first approach is fast but lacks accuracy, and the second is time-consuming and costly.

“It’s not a realistic tool when you’re doing a study,” said Durland. “If you have a nutrition study, you can’t afford to sample a thousand fish. With these cameras, we can estimate that liver fat to a pretty good accuracy. Then, you can do a lot more samples, and it’s a metric you can actually measure rather than just guess at.”

Using the infrared cameras, the research team photographs the fish’s liver and then takes a sample of it, extracting the lipid with laboratory methods. The team then constrains the image’s spectra somewhat and uses the paired lab measurements to build a model that predicts how much fat is present.
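The calibration step pairs each image with a lab measurement so a model can learn to predict fat from the image alone. The article does not name the model Nofima uses; spectral calibrations are often built with multivariate methods such as partial least squares regression. The minimal sketch below instead fits a straight line to a single feature, the mean value inside an assumed fat-sensitive band window, as a simplified stand-in for constraining the spectra. All spectra, lab values and the window indices are synthetic.

```python
# Minimal calibration sketch: predict lab-measured liver fat (%) from one spectral feature.
# All spectra, lab values and the band window are synthetic/hypothetical.

WINDOW = (2, 4)  # hypothetical "fat-sensitive" band indices [start, stop)


def band_window_mean(spectrum, start, stop):
    """Mean of spectrum[start:stop], i.e. the spectrum constrained to one window."""
    window = spectrum[start:stop]
    return sum(window) / len(window)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic training set: one spectrum per imaged liver, paired with its lab lipid result.
spectra = [
    [0.9, 0.8, 0.2, 0.2, 0.1, 0.1],
    [0.9, 0.8, 0.3, 0.3, 0.1, 0.1],
    [0.9, 0.8, 0.4, 0.4, 0.1, 0.1],
    [0.9, 0.8, 0.5, 0.5, 0.1, 0.1],
]
lab_fat_pct = [5.0, 8.0, 11.0, 14.0]

features = [band_window_mean(s, *WINDOW) for s in spectra]
slope, intercept = fit_line(features, lab_fat_pct)

# Predict fat for a new liver from its image alone, with no lab extraction.
new_liver = [0.9, 0.8, 0.45, 0.45, 0.1, 0.1]
predicted = slope * band_window_mean(new_liver, *WINDOW) + intercept
print(f"slope={slope:.1f}, intercept={intercept:.1f}, predicted fat={predicted:.1f}%")
```

The expensive lab extraction is only needed for the training set; after that, each new liver costs one image.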

“We’ve done this in fish fillets, so we said, let’s see what other parts of the fish we can detect fat in,” said Durland. “And we’ve seen that they’re pretty effective at detecting fat in liver as well as viscera. Through some analytical techniques, we get to a point where we can predict it with 88 percent accuracy and a mean error of less than 2 percent.”
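The article does not say how the 88 percent figure or the mean error were calculated. Two metrics commonly reported for this kind of calibration are the coefficient of determination (R²) and the mean absolute error; the sketch below computes both on hypothetical held-out predictions, with errors expressed in percentage points of fat. Whether Nofima’s “accuracy” corresponds to R² is an assumption.

```python
# Two common calibration metrics, computed on hypothetical held-out livers.


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def mean_absolute_error(actual, predicted):
    """Average absolute difference, here in percentage points of fat."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


lab_fat = [4.0, 6.0, 8.0, 10.0]    # hypothetical lab lipid results (%)
model_fat = [5.0, 5.0, 9.0, 9.0]   # hypothetical camera-based predictions (%)

r2 = r_squared(lab_fat, model_fat)
mae = mean_absolute_error(lab_fat, model_fat)
print(f"R^2 = {r2:.2f}, MAE = {mae:.1f} percentage points")  # R^2 = 0.80, MAE = 1.0 percentage points
```

Evaluating on livers that were not used to fit the model is what makes such figures meaningful; scoring the training set would overstate accuracy.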


Dialing in feeds

So far, Durland said there’s been “a lot of interest from industry” in using infrared cameras for detecting visceral fat, especially as it relates to feed efficiency.

“We’ve bred them to grow fast, but the next frontier is feed efficiency – a trait that we’d really like to improve in these stocks,” said Durland. “One of the challenges is the efficiency with which they eat and turn that feed into meat. When you’re harvesting a set of fish, part of that calculation has to be how much fat they have put on in their viscera.”

Right now, Durland said, the existing tools “just aren’t suitable” for large-scale trials. The trait can be measured in a laboratory, but that “costs too much and takes too long.” Visual methods, in which people eyeball the color and “guesstimate” how much of the viscera is covered in fat, vary in accuracy. More accurate techniques involve weighing the fish, taking lab samples and calculating the percentage of fat, but they can be slow, messy and expensive. Infrared cameras could improve the process on all counts.

“With IR (infrared) cameras, we can train models to detect fat and map it onto the guts,” said Durland. “We then calculate the percentage coverage, and taking weight of the viscera into account, calculate total fat. That one has more room to run because we can automatically calculate – highly accurately – but we can do it fast. For breeders, that’s the big interest.”
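Durland’s description translates into simple arithmetic: the share of viscera pixels labelled as fat, combined with the weight of the viscera. How coverage is converted into fat mass is not specified, so the minimal sketch below assumes a hypothetical linear calibration factor (fat_fraction_at_full_cover) and uses small hand-made masks in place of real model output.

```python
# Visceral fat sketch: coverage from pixel masks, then a hypothetical mass conversion.


def coverage_fraction(fat_mask, viscera_mask):
    """Share of viscera pixels that the fat model labelled as fat."""
    viscera_px = sum(1 for row in viscera_mask for v in row if v)
    if viscera_px == 0:
        raise ValueError("viscera mask is empty")
    fat_px = sum(
        1
        for f_row, v_row in zip(fat_mask, viscera_mask)
        for f, v in zip(f_row, v_row)
        if f and v  # only count fat pixels that lie on the viscera
    )
    return fat_px / viscera_px


def estimate_fat_grams(coverage, viscera_weight_g, fat_fraction_at_full_cover):
    """Hypothetical linear conversion: coverage x viscera weight x calibration factor."""
    return coverage * viscera_weight_g * fat_fraction_at_full_cover


viscera_mask = [
    [False, True, True, False],
    [True, True, True, True],
    [False, True, True, False],
]
fat_mask = [
    [False, True, False, False],
    [True, True, False, False],
    [True, False, False, False],  # this fat pixel is off the viscera and is ignored
]

coverage = coverage_fraction(fat_mask, viscera_mask)
fat_g = estimate_fat_grams(coverage, viscera_weight_g=200.0, fat_fraction_at_full_cover=0.4)
print(f"coverage = {coverage:.1%}, estimated visceral fat = {fat_g:.1f} g")
```

Here 3 of 8 viscera pixels are fat, giving 37.5 percent coverage; with the assumed 200 g viscera and 0.4 factor, that works out to 30 g of fat. A real system would fit the conversion factor against lab measurements rather than assume it.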

Mucus health in farmed fish is especially challenging to measure, but because mucus is the fish’s first line of defense, it could serve as a trait for predicting how fish might respond to pathogens and parasites. The research team is studying whether remote sensors could make mucus health easier to assess in farmed fish.

“You can scrape it and bring it into the lab, but again, it’s expensive and takes a long time,” Durland said. “Our idea is to develop some basic sensors. If you could take a micro pH probe and just rest it on the surface of a salmon and get a pH reading, maybe that would inform you in some way what’s happening with that fish.”

As the project enters its second year, the research team is focusing on advancing “good models” by getting “more fish, different ages, different environments to produce something that we can pass on to the research community or industry.”

“The technology for salmon is unparalleled, and we still have a very poor understanding of welfare,” said Durland. “These tools have the most potential to advance how we’re assessing health. I’m not criticizing the work anyone else has done, because it’s a very difficult thing and it takes a lot of work to understand the health status of fish. But there’s an opportunity to significantly improve a lot of the things we do through automation, computers and hyperspectral imagery.”

Follow the Advocate on Twitter @GSA_Advocate


Author


Lisa Jackson

Associate Editor Lisa Jackson is a writer who lives on the lands of the Anishinaabe and Haudenosaunee nations in Dish with One Spoon territory and covers a range of food and environmental issues. Her work has been featured in Al Jazeera News, The Globe & Mail and The Toronto Star.
